According to Dr Jones, writing in Nature, the global population is growing and we are still battling pathogens that have been with us since the start of recorded human history, such as tuberculosis. At the same time, novel pathogens are emerging from non-human hosts at an increasing rate, which poses a significant threat to public health. Within the last ten years, we have witnessed the emergence of new viruses that could spread across international borders and wreak global havoc, the latest being the novel coronavirus SARS-CoV-2, which causes COVID-19. The recent development of machine learning-based tools for healthcare providers offers novel ways to combat such global pandemics.

Machine learning, as defined by Oxford Academic, encompasses the collection of tools and techniques for identifying patterns in data. In traditional methods of identifying patterns in data, we approach the system with our own presumptions about which components of the data (age, sex, pre-existing conditions) affect the outcome of interest (patient survival).

In machine learning, by contrast, we provide the data and the machine identifies trends and patterns, enabling us to formulate a model that predicts patient outcomes. This narrative review covers such tools: how they are useful in healthcare, and how they are being used in the prediction, prevention, and management of COVID-19. It also discusses tools used against past infectious diseases caused by viruses such as SARS-CoV-1 and MERS-CoV, and how they may translate to COVID-19.

Outbreak detection

Biosurveillance is the science of early detection and prevention of a disease outbreak in the community, as stated by Dr Allen in his 2018 application book. According to the Online Journal of Public Health Informatics, analytics, machine learning, and natural language processing (NLP) are being increasingly used in biosurveillance. Social media, news reports, and other online data can be scanned to detect localized disease outbreaks before they reach the level of pandemics. The Canadian company BlueDot successfully used machine learning algorithms to detect the early outbreak of COVID-19 in Wuhan, China, by the end of December 2019, as recorded by the Journal of Travel Medicine. Big data analysis of medical records and of satellite imaging (e.g., cars crowding around a hospital) has also been used in the past to detect localized outbreaks.

Google Trends has been used in the past to detect outbreaks of Zika virus infection in populations, using dynamic forecasting models. Sentiment analysis, as described in the Journal of Public Health and Surveillance, is the technique of applying natural language processing to social media to understand the positive and negative emotions of the population. These sentinel biosurveillance techniques could help detect outbreaks before they become pandemics, giving the health system valuable time to prepare for prevention and management.
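
As a rough illustration of the idea behind scanning online data for outbreaks, the sketch below flags days on which mentions of a symptom keyword jump well above their recent baseline. It is only a minimal example under stated assumptions: the function name `flag_spikes`, the 7-day window, the threshold of 3 standard deviations, and the daily counts are all hypothetical, and it is not the method used by BlueDot or by the Google Trends studies mentioned above.

```python
from statistics import mean, stdev

def flag_spikes(daily_counts, window=7, threshold=3.0):
    """Return indices of days whose count exceeds the trailing-window mean
    by more than `threshold` standard deviations."""
    flagged = []
    for i in range(window, len(daily_counts)):
        baseline = daily_counts[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        # Guard against a perfectly flat baseline, where sigma is zero.
        if sigma == 0:
            sigma = 1.0
        if (daily_counts[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged

# Made-up daily counts of posts mentioning a symptom keyword.
counts = [10, 12, 11, 9, 10, 13, 11, 12, 10, 40, 55]
print(flag_spikes(counts))  # prints [9, 10]
```

A real surveillance system would also need to handle seasonality, media-driven noise, and reporting delays, which this sketch ignores.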

Prediction of spread

Various statistical, mathematical, and dynamic predictive models have been used successfully to predict the extent and spread of infectious diseases through the population, for example in Dr Sangwon Chae’s study, in the risk assessment of Ebola virus in Uganda, and in improving the response to a potential H7N9 pandemic. As opposed to traditional epidemiological predictive models, big-data-driven models have the added advantages of adaptive learning, trend-based recalibration, and the flexibility and scope to improve as the disease process becomes better understood. They can also estimate the impact of interventions, such as social distancing, in curbing the spread.

The most common approach is the Susceptible-Exposed-Infectious-Recovered (SEIR) modelling method, which is now being used to predict the areas and extent of COVID-19 spread. These techniques can also be used to estimate other parameters of the epidemic, such as under-reporting of cases, the effectiveness of interventions, and the accuracy of testing methods.
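
To make the SEIR idea concrete, here is a minimal sketch of the standard compartmental model, integrated with simple Euler steps. The parameter values (`beta`, `sigma`, `gamma`), the population size, and the function name `simulate_seir` are illustrative assumptions; they are not fitted to COVID-19 data and are not taken from any of the studies cited here.

```python
def simulate_seir(population, initial_infectious, beta, sigma, gamma, days, dt=0.1):
    """Simulate a basic SEIR model and return one (S, E, I, R) tuple per day.

    beta:  transmission rate per day
    sigma: rate at which exposed people become infectious (1 / incubation period)
    gamma: recovery rate (1 / infectious period)
    """
    s = float(population - initial_infectious)
    e = 0.0
    i = float(initial_infectious)
    r = 0.0
    steps_per_day = round(1 / dt)
    history = [(s, e, i, r)]
    for _ in range(days):
        for _ in range(steps_per_day):
            # Flows between compartments during one small time step.
            new_exposed = beta * s * i / population * dt
            new_infectious = sigma * e * dt
            new_recovered = gamma * i * dt
            s -= new_exposed
            e += new_exposed - new_infectious
            i += new_infectious - new_recovered
            r += new_recovered
        history.append((s, e, i, r))
    return history

# Illustrative (assumed) parameters only.
history = simulate_seir(population=1_000_000, initial_infectious=10,
                        beta=0.5, sigma=0.2, gamma=0.1, days=200)
peak_day = max(range(len(history)), key=lambda d: history[d][2])
print(f"Infectious count peaks on day {peak_day}")
```

Lowering `beta` in this sketch is one crude way to explore the effect of an intervention such as social distancing on the size and timing of the peak.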

However, a predictive model is only as good as the data it is based on, and in the event of a global pandemic, data sharing across communities is of paramount importance. This was one of the significant obstacles in learning about and modelling the 2013–2016 Ebola virus outbreak, according to Dr Moorthy and Dr Millette. The World Health Organization (WHO) has proposed a consensus on expedited data sharing on the COVID-19 outbreak to promote inter-community learning and analytics in this area.

Preventive strategies and vaccine development

In research published in the Journal of Bioinformatics and Computational Biology, artificial neural networks (ANNs) were used to predict antigenic regions with a high density of binders (antigenic hotspots) in the viral membrane protein of Severe Acute Respiratory Syndrome Coronavirus (SARS-CoV). This information is critical to the development of vaccines.

- Using machine learning for this purpose allows rapid scanning of the entire viral proteome, which makes vaccine development faster and cheaper.

- Reverse vaccinology and machine learning were successfully employed to identify six potential vaccine target proteins in the SARS-CoV-2 proteome.

- Machine learning has also been used in the past to predict the strains of influenza virus that are more likely to cause infection in a population in an upcoming year, and in turn, should constitute the year’s seasonal influenza vaccine.
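
The notion of an antigenic hotspot, a region with a high density of predicted binders, can be sketched with a sliding window. The example assumes that some trained predictor has already labelled each position in the protein as a binder (1) or not (0); the flags, the window size, the minimum count, and the function name `find_hotspots` are all hypothetical and do not reproduce the cited study.

```python
def find_hotspots(is_binder, window=5, min_binders=3):
    """Return (start, end) index ranges, end exclusive, covering every window
    that contains at least `min_binders` predicted binders."""
    hotspots = []
    for start in range(len(is_binder) - window + 1):
        if sum(is_binder[start:start + window]) >= min_binders:
            end = start + window
            # Merge with the previous range when the windows overlap or touch.
            if hotspots and start <= hotspots[-1][1]:
                hotspots[-1] = (hotspots[-1][0], end)
            else:
                hotspots.append((start, end))
    return hotspots

# Made-up per-position predictions: 1 = predicted binder, 0 = not.
flags = [0, 0, 1, 1, 1, 0, 0, 0, 0, 0, 0, 1, 0, 1, 1, 0]
print(find_hotspots(flags))  # prints [(0, 7), (10, 16)]
```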

Early case detection and tracking

Early case identification, quarantining, and preventing exposure in the community are crucial pillars in managing an epidemic such as COVID-19. Mobile phone-based surveys can be useful for early identification of cases, especially in quarantined populations. Such methods have shown success in Italy, where influenza patients were identified through a web-based survey. As opposed to traditional methods of survey and analysis, artificial intelligence tools can collect and analyze large amounts of data, identify trends, stratify patients based on risk, and propose solutions for the population rather than the individual.

The government of India recently launched a mobile application called “Aarogya Setu”, which tracks its users’ exposure to people potentially infected with COVID-19 by using Bluetooth to scan the surrounding area for other smartphone users. If a patient tests positive, the data from the mobile application can be used to track down every app user whom the patient encountered within the last 30 days.
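
The lookup described above can be sketched as a filter over a log of encounters. This is not how Aarogya Setu is actually implemented; the `(user_a, user_b, day)` log format, the user names, and the function name `contacts_to_notify` are assumptions made purely for illustration.

```python
from datetime import date, timedelta

def contacts_to_notify(encounters, positive_user, test_date, lookback_days=30):
    """Return the set of users who met `positive_user` within the lookback window.

    `encounters` is an iterable of (user_a, user_b, day) tuples.
    """
    cutoff = test_date - timedelta(days=lookback_days)
    contacts = set()
    for user_a, user_b, day in encounters:
        if not (cutoff <= day <= test_date):
            continue  # encounter falls outside the lookback window
        if user_a == positive_user:
            contacts.add(user_b)
        elif user_b == positive_user:
            contacts.add(user_a)
    return contacts

# Made-up encounter log.
encounters = [
    ("alice", "bob", date(2020, 4, 1)),
    ("carol", "alice", date(2020, 4, 10)),
    ("alice", "dave", date(2020, 2, 1)),   # more than 30 days before the test
    ("bob", "erin", date(2020, 4, 12)),    # does not involve alice
]
print(sorted(contacts_to_notify(encounters, "alice", date(2020, 4, 15))))
# prints ['bob', 'carol']
```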

- The close physical and economic proximity with China should have resulted in high morbidity and mortality due to COVID-19 in Taiwan.

- However, with the help of machine learning, Taiwan was able to keep the number of infected patients far lower than what was initially predicted.

- They identified the threat early, mobilized their national health insurance database, and customs and immigration database to generate big data for analytics.

- Machine learning on this big data helped them stratify their population into lower risk or higher risk based on several factors, including travel history.

- Persons with higher risk were quarantined at home and were tracked through their mobile phones to ensure that they remained in quarantine.

- This application of big data, in addition to active case-finding efforts, ensured that case numbers were far lower than initially anticipated, as recorded in the COVID-19 official resource centre.
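
The risk stratification step described in the list above can be illustrated with a very small logistic regression trained by gradient descent. This is a sketch under stated assumptions, not Taiwan's actual system: the two features (recent travel, symptoms), the eight training records, and the function names are invented for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(rows, labels, lr=0.5, epochs=2000):
    """Fit logistic regression weights with batch gradient descent."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    n = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            p = sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))
            err = p - y
            for j, xi in enumerate(x):
                grad_w[j] += err * xi
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias

def risk_score(weights, bias, x):
    """Predicted probability that a person belongs to the higher-risk group."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))

# Made-up records: [recent_travel, symptomatic] -> 1 if later confirmed as a case.
rows = [[1, 1], [1, 0], [0, 1], [0, 0], [1, 1], [0, 0], [1, 0], [0, 0]]
labels = [1, 1, 0, 0, 1, 0, 0, 0]

weights, bias = train_logistic(rows, labels)
for person in ([1, 1], [0, 0]):
    score = risk_score(weights, bias, person)
    group = "higher risk" if score >= 0.5 else "lower risk"
    print(person, group)
```

With real data the model would be trained on many more records and features, and the 0.5 cut-off used here for the two groups would need to be chosen deliberately.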

Prognosis prediction

Machine learning algorithms have been used previously to predict prognosis in patients affected by MERS-CoV infection. The patient’s age, disease severity on presentation to the healthcare facility, whether the patient was a healthcare worker, and the presence of pre-existing comorbidities were the four factors identified as the major predictors of the patient’s recovery. These findings are consistent with the currently observed trends in COVID-19, as reported by a study in The Lancet.

Treatment development

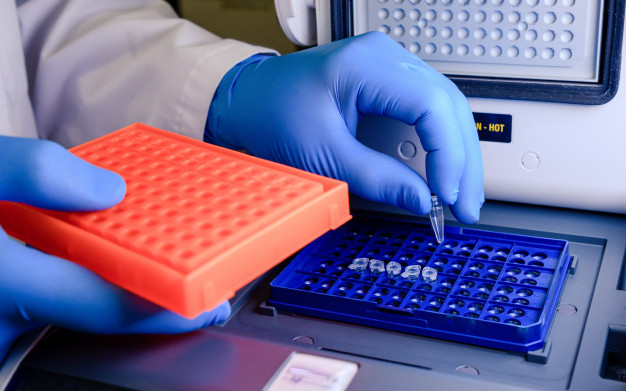

Machine learning tools have been used in drug development, drug testing, and drug repurposing. They enable us to interpret large gene expression profile data sets to suggest new uses for currently available medications. Deep generative models, also known as AI imagination, can design novel therapeutic agents with the desired activity. According to Dr Clémence Réda's latest research, these tools help reduce the cost and time of developing drugs, help in developing novel therapeutic agents, and predict possible off-label uses for some existing therapeutic agents.

Daviteq's input on machine learning applications

Machine learning provides an exciting array of tools that are flexible enough to allow their deployment in any stage of the pandemic. With the large amount of data that is being generated while studying a disease process, machine learning allows for analysis and rapid identification of patterns that traditional mathematical and statistical tools would take a long time to derive.

Its flexibility, its ability to adapt to a new understanding of the disease process, its self-improvement as new data becomes available, and the lack of human prejudice in its approach to analysis make machine learning a highly versatile tool for managing novel infections.

However, with such an enhanced ability to derive meaning from large amounts of data comes a greater demand for quality control during the collection, storage, and processing of that data. Standardization of data structures across populations would allow these systems to adapt and learn from data across the globe, which was not possible in the past and is even more important when learning about and managing a global pandemic like COVID-19. In addition, a new pandemic tends to produce a lot more “noise” in the data, so feeding this immature, outlier-ridden data into an AI algorithm should always be approached with caution.
